Kevin Anderson has a new talk in Sweden up on YouTube.

Kevin Anderson talks for 19 minutes. Then there is a panel discussion.

Here is the talk background from the University of Uppsala.

Robin Johnson's Economics Webpage has moved to https://theecanmole.github.io/Robin-Johnsons-Economics-Web-Page/

Who remembers the headline announcement of the proposal: that there would be a 'target' of 90% of rivers and lakes being swimmable by 2040?

The environmental NGOs were very critical of the target (and the proposal as a whole).

The Green Party said the new swimmable standard was just shifting the goalposts.

Forest & Bird's Kevin Hague described the proposal as a reduced swimmability standard.

Marnie Prickett of the Choose Clean Water group described the proposal as "fraud" because it was intended to change the definition of swimmable to meet a lower standard.

The environmental NGOs' argument was that the new proposed 'risk' standard for swimming (expressed as E. coli counts, an indicator of faecal matter and pathogens) allowed a one in twenty (5%) probability of getting sick, when the old standard was a much more precautionary one in a hundred (1%) probability of getting sick.

Dr Siouxsie Wiles and Dr Jonathan Marshall explained that the change in risk wasn't quite as simple as that, as did University of Auckland Professor of Biostatistics Thomas Lumley.

However, I thought there was something wrong with that 90 percent number. I seemed to recall Green MP Eugenie Sage saying in 2014 that more than 60 percent of the monitored river swimming sites were unfit for swimming.

The Clean Water package 2017 included this bar chart, which shows that the 90% 'swimmable' target (and the five new swimming-quality categories from 'excellent' to 'poor') is actually expressed in a different variable: length of river measured in kilometres (not number of monitoring sites).

It also shows, in the left-most bar, that using 'length of river' in place of the number of river monitoring sites produces a very different result.

On the basis of recent data, 72 percent of kilometres of rivers currently meet the 'swimmable' standard (the sum of the 'Fair', 'Good' and 'Excellent' quality categories). Expressing the results in kilometres of river length, and not in numbers of sampling sites, immediately lets a more positive spin be put on the results.
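Here is a small illustration of why the choice of variable matters. It is a minimal Python sketch with made-up numbers, not the Ministry's data. It assumes a few long, clean upland reaches and several short, poor lowland reaches, each represented by one site. The 'swimmable' share counts the Fair, Good and Excellent categories, as above. The function names and figures are hypothetical.

```python
# Categories counted as 'swimmable' (Fair + Good + Excellent).
SWIMMABLE = {"Excellent", "Good", "Fair"}

def share_by_sites(sites):
    """Percentage of monitoring sites whose category counts as swimmable."""
    ok = sum(1 for _, category in sites if category in SWIMMABLE)
    return 100 * ok / len(sites)

def share_by_length(sites):
    """Percentage of total river length (km) whose category counts as swimmable."""
    total_km = sum(km for km, _ in sites)
    ok_km = sum(km for km, category in sites if category in SWIMMABLE)
    return 100 * ok_km / total_km

# Hypothetical (river length in km, quality category) for each site.
sites = [
    (400.0, "Excellent"),
    (300.0, "Good"),
    (10.0, "Poor"),
    (10.0, "Intermittent"),
    (10.0, "Poor"),
]

print(f"By sites: {share_by_sites(sites):.0f}%")   # By sites: 40%
print(f"By km:    {share_by_length(sites):.0f}%")  # By km:    96%
```

The same five sites give 40% 'swimmable' when you count sites but 96% when you weight by kilometres. That is the kind of gap the left-most bar hints at.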

The underlying data must be water quality sampling results from NIWA's National Rivers Water Quality Network (NRWQN) and sites operated by regional councils.

So, way back on 15 March 2017, I asked for the underlying sampling data from the water quality monitoring sites.

I felt I had expressed my official information request sufficiently clearly to get a reply in a reasonable time.

On your website on the page "Clean Water package 2017" there is a bar chart explaining the target of 90% of rivers and lakes swimmable by 2040 included in the report "Clean Water, ME 1293". The bar chart is also on page 11 of report "Clean Water, ME 1293". The bar chart shows kilometres (which I assume are lengths of segments of rivers) in each of the five 'quality' categories (Poor, Intermittent, etc) with a time variable which has three bars; "Current", "2030" and "2040". Will you please provide me with the underlying data; which I assume must be water quality monitoring site results (and future predictions for 2030 and 2040) analysed by the five quality categories and the three time categories "Current", "2030" and "2040". Will you also please include the name or number of each monitoring site, its region and for the "Current" selection, the sampling period for the actual E Coli counts. Please provide this data either in comma separated values or Excel 2007 format via the FYI website.

However, I had to lodge a complaint with the Office of the Ombudsmen to eventually obtain the data. That only happened after the investigator from the Office of the Ombudsmen brokered a deal with the Ministry for the Environment. He rang me and said that the Ministry didn't want to give me the data in either .csv or .xls format, as I'd requested, because the data was in a special binary format, .rdata, specific to a certain statistical programming language named after the letter 'R'.

In other words, it appeared to me that the Ministry were claiming a 'technical' problem in providing the data I had requested, and not an intent to frustrate the information request.

Sure, it's fair enough to take the Ministry at their word that they didn't intend to delay and frustrate my request. However, whatever the intention, it was still a delay from my point of view as the requester.

I told the investigator I would be happy to get the data in .rdata format. I also expressed the view that it would have only been a very short line of 'R' script to convert the .rdata formatted file into .csv format. And that it was a weak reason for the delay and for not providing me the data in .csv format. I observed that the Ministry's response was pretty unsatisfactory from an open data perspective. The investigator said he couldn't comment on open data issues, as we were in an official information space.

I was finally emailed the data in .rdata format by the Manager, Executive Relations, on 5 July 2017.

I used this R script;

to write the .rdata file to a .csv file.

The .rdata file is WQdailymeansEcoli.rdata at Google Drive.

The .csv format file is WQdailymeansEcoli.csv at Google Drive.

Now I just need to find the time to analyse the sampling sites data.

The photograph to the left is of Upper Nina Biv.

With three old friends I spent four nights and three days in the Lake Sumner Forest Park.

From 8 July to 11 July 2017 we tramped up the Nina River, to Nina Hut. We day-tripped to Upper Nina Bivvy and then crossed Devilskin Saddle (after visiting Devils Den Bivvy) to the Doubtful River and we stayed our last night at Doubtless Hut.

Finally we walked out to State Highway 7 and drove to my friends' relatively new 'bach' at St Arnaud just before snowfalls closed Lewis Pass.

I have popped my ten best photos into an album on Flickr, and the photo below is the result of some Flickr 'embed' script.

What did I learn and bring back from the tramp?

I could say that I took too much food (I had some left at the end), and that I needn't have taken a pair of fleece pants (yes, it was cold, but we all tramped in 'polypro' tights, and a spare dry pair of tights would have been lighter). And never omit your sleeping mat! (It should always be in your pack, even on a 'huts' trip, and even more so in winter!)

But that's just a checklist for next time.

When the trip was being planned I said I'd only tramped in the area once or twice. Actually, I'd temporarily forgotten that I had done a lot of tramps between Lake Sumner and Lewis Pass. The trip evoked a lot of memories and a lot of yarns ("There was another trip I may not have mentioned, where we bush-bashed up to Brass Monkey Biv..."), which my long-suffering tramping companions politely heard out.

Even unsuspecting fellow trampers we met heard a few yarns! We met two wonderful women teachers from Christchurch who were outdoor instructors. Of course it turned out we had friends in common, and that launched more tall tales from our respective archives.

The big wake-up for me was that although I do look like a bald, white-haired 55-year-old with a lined face, I got that way by doing a lot of tramps and other adventurous things!

And also that my old tramping friends are still my bestest-ever friends.

The chart shows greenhouse gas emissions by sector of the economy. It includes 'negative' emissions, more properly called carbon removals, carbon sequestration or simply carbon sinks. These are the sum of all the carbon dioxide taken out of the atmosphere by the sector of the economy called Land Use, Land Use Change and Forestry.
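Because the LULUCF removals enter the totals as negative numbers, gross and net emissions come from simple arithmetic on the sector totals. This is a minimal Python sketch. It assumes the sector totals are already in a dictionary in one consistent unit, with LULUCF stored as a negative value under a hypothetical key "LULUCF". The figures are made up.

```python
def gross_and_net(sectors, removals_key="LULUCF"):
    """Gross = sum of all sectors except LULUCF; net = gross plus the (negative) LULUCF value."""
    gross = sum(value for key, value in sectors.items() if key != removals_key)
    net = gross + sectors.get(removals_key, 0.0)  # no LULUCF entry means net equals gross
    return gross, net

# Made-up sector totals (not inventory data), all in the same units.
sectors = {"Energy": 30.0, "Agriculture": 40.0, "Waste": 5.0, "LULUCF": -25.0}
print(gross_and_net(sectors))  # (75.0, 50.0)
```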

Here is the chart.

This time I took a more traditional R approach to getting the data into R from the Excel file CRF summary data.xlsx.

First, I opened an X terminal window and used the Linux wget command to download the spreadsheet "2017 CRF Summary data.xlsx" to a folder called "/nzghg2015".

I then used ssconvert (which is part of Gnumeric) to split the Excel (.xlsx) spreadsheet into comma-separated values files.

The Excel spreadsheet had three worksheets, two with data and one that was empty, so there's now a .csv file for each sheet, even the empty one. I then read in the .csv file for emissions by sector.
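The step of picking up the per-sheet .csv files and ignoring the empty one can be scripted too. Here is a minimal Python sketch using only the standard library. It assumes the ssconvert split has already been done (the standard library cannot read .xlsx) and that the files are UTF-8 text. The folder and file names in the demonstration are placeholders, not the real ssconvert output names.

```python
import csv
import tempfile
from pathlib import Path

def read_nonempty_csvs(folder):
    """Read every .csv file in `folder`, dropping blank rows and skipping files with no data."""
    tables = {}
    for path in sorted(Path(folder).glob("*.csv")):
        with path.open(newline="", encoding="utf-8") as f:
            rows = [row for row in csv.reader(f) if any(cell.strip() for cell in row)]
        if rows:  # an empty sheet produces no rows and is left out
            tables[path.name] = rows
    return tables

# Demonstration with two throwaway files standing in for the converted sheets.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "sheet1.csv").write_text("Year,Energy\n1990,1.0\n", encoding="utf-8")
    Path(tmp, "sheet2.csv").write_text("", encoding="utf-8")
    demo = read_nonempty_csvs(tmp)

print(sorted(demo))  # ['sheet1.csv']
```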

The final step is to make the chart.

Minister Bennett and the Ministry have as their headline 'Greenhouse gas emissions decline'.

I thought I would whip up a quick chart from the new data with R.

I pretty much doubted that there was any discernible decline in New Zealand's greenhouse gas emissions to justify Bennett's statement. We should always look at the data. Here is the chart of emissions from 1990 to 2015.

Gross emissions (emissions excluding the carbon removals from Land Use, Land Use Change and Forestry (LULUCF)) have plateaued since the mid-2000s, with actual gross emissions for the last few years sitting just below the linear trend line.

Even so, gross 2015 emissions are still 24% greater than gross 1990 emissions.

For net emissions (emissions including the carbon removals from Land Use Land Use Change and Forestry) the data points for the years since 2012 sit exactly on the linear trend line. Net 2015 emissions are still 64% greater than net 1990 emissions.
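The two comparisons in these paragraphs, the percentage change from 1990 and whether recent years sit above or below a linear trend, are easy to compute. This is a minimal Python sketch using ordinary least squares. The function names are my own, and the test values are synthetic rather than inventory figures.

```python
def percent_change(first, last):
    """Percentage change from a base-year value to a later value."""
    return 100 * (last - first) / first

def linear_trend(xs, ys):
    """Ordinary least-squares slope and intercept of ys on xs."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def residuals(xs, ys):
    """Observed minus trend value; negative means the point sits below the trend line."""
    slope, intercept = linear_trend(xs, ys)
    return [y - (intercept + slope * x) for x, y in zip(xs, ys)]
```

For example, `percent_change(gross_1990, gross_2015)` (hypothetical variable names) would give the "24% greater" figure. `residuals(years, gross)` would show whether the last few years fall just below the line.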

There was of course more data wrangling and cleaning than I remembered from when I last made a chart of emissions!

The Ministry for the Environment's webpage for the Greenhouse Gas Inventory 2015 includes a link to a summary Excel spreadsheet. The Excel file includes two worksheets.

One method of data-cleaning would be to save the two work sheets as two comma-separated values files after removing any formatting. I also like to reformat column headings by either adding double-speech marks or by concatenating the text into one text string with no spaces or by having a one-word header, say 'Gross' or 'Net'.

Of course, that's not what I did in the first instance!

Instead, I copied columns of data from the summary Excel sheet and pasted them into Convert Town's column to comma-separated list online tool. I then pasted the comma-separated lists into my R script file for the very simple step of assigning them into numeric vectors in R. Which looks like this.

Then the script for the chart is:

The result is that the two pieces of R script meet a standard of reproducible research, they contain all the data and code necessary to replicate the chart. Same data + Same script = Same results.
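The column-to-list step done with the online tool can also be scripted, which keeps it inside the audit trail. Here is a minimal Python sketch. It assumes the pasted column holds one numeric value per line, and it emits an R assignment line using `c()`. The function name and the variable name `year` are hypothetical.

```python
def column_to_r_vector(name, pasted):
    """Turn a pasted spreadsheet column (one value per line) into an R 'name <- c(...)' line."""
    values = [line.strip() for line in pasted.splitlines() if line.strip()]
    for value in values:
        float(value)  # raises ValueError on non-numeric cells, e.g. "2,345" or a stray heading
    return f"{name} <- c({', '.join(values)})"

pasted = """
1990
1991
1992
"""
print(column_to_r_vector("year", pasted))  # year <- c(1990, 1991, 1992)
```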

I also uploaded the chart to Wikimedia Commons and included the R script. Wikimedia Commons facilitates the use of R script by providing templates for syntax highlighting. So with the script included, the Wikimedia page for the chart is also reproducible. Here is the Wikimedia Commons version of the same chart.

For comparison, here is my equivalent chart of greenhouse gas emissions for 1990 to 2010. Gross emissions up 20% and net emissions up 59%.

What can I say to sum up, other than plus ça change, plus c'est la même chose (the more things change, the more they stay the same)?

This post features the atmospheric carbon dioxide data package. Again, it is one of the Open Knowledge International (OKFN) Frictionless Data core data packages, that is to say it is one of the

"Important, commonly-used datasets in high quality, easy-to-use & open form".

The data is known as the Keeling Curve after the American chemist and oceanographer Charles Keeling. It is an iconic image for anthropogenic climate change.

Like the global temperature data package, the atmospheric carbon dioxide data package is open, tidy and self-updating, and resides in an underlying GitHub data package.

Similarly, the data package can be downloaded as a zip file and unzipped into a folder. That will include the data files in .csv format, an open data licence, a read-me file, a json file and a Bash script that updates the data from source.
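The .json file in a Frictionless Data package is the package descriptor, which lists the data files the package contains. Here is a minimal Python sketch that lists them. It assumes the descriptor is named `datapackage.json` and has a top-level `resources` list whose entries carry `name` and `path`. The resource names in the demonstration are placeholders, not the real CO2 package's names.

```python
import json
import tempfile
from pathlib import Path

def list_resources(package_dir):
    """Return (name, path) for each resource listed in a Data Package descriptor."""
    descriptor = json.loads(Path(package_dir, "datapackage.json").read_text(encoding="utf-8"))
    return [(r.get("name"), r.get("path")) for r in descriptor.get("resources", [])]

# Demonstration with a throwaway descriptor.
with tempfile.TemporaryDirectory() as tmp:
    example = {
        "name": "example-package",
        "resources": [
            {"name": "monthly", "path": "data/monthly.csv"},
            {"name": "annual", "path": "data/annual.csv"},
        ],
    }
    Path(tmp, "datapackage.json").write_text(json.dumps(example), encoding="utf-8")
    demo = list_resources(tmp)

print(demo)
```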

I can run the Bash script file on my laptop in an X-terminal window and it goes off and gets the latest data and formats it into 'tidy' csv format files.

Here is a screenshot of the script file updating and formatting the data.

Here is my chart.

Here is the R code for the chart.

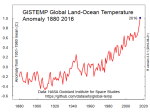

Radio New Zealand reports that 2016 was the new record warmest year in the instrumental record, so I will pitch in too. But with an extra touch of open data and reproducible research.

It's been a while since I uploaded a chart of global temperature data: not since I made this graph in 2011, and before that this graph from 2010. So it's about time for some graphs, especially since 2016 was the world's warmest year as well as New Zealand's warmest year.

When I made those charts, I had to do some 'data cleaning' to convert the raw data to tidy data (Wickham, H. 2014 Sept 12. Tidy Data. Journal of Statistical Software. [Online] 59:10), where each variable is a column, each observation is a row, and each type of observational unit is a table. And to convert that table from text format to comma separated values format.
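The core of that tidying step is reshaping a wide table into a long one. The wide table has one row per year and one column per month; the long one has one row per (year, month, value) observation. Here is a minimal Python sketch. It assumes the raw table has already been split into a header list and rows of strings, and that missing cells are blank. Pass other markers through the hypothetical `missing` parameter if the source uses them. The values in the test are made up.

```python
def wide_to_long(header, rows, id_col="Year", missing=("",)):
    """Reshape a wide table (one column per month) into tidy (year, month, value) rows.

    Cells whose text is in `missing` are dropped rather than stored as values."""
    id_index = header.index(id_col)
    tidy = []
    for row in rows:
        year = int(row[id_index])
        for column, cell in zip(header, row):
            if column == id_col or cell.strip() in missing:
                continue
            tidy.append((year, column, float(cell)))
    return tidy
```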

I would have used a spreadsheet program to manually edit and 'tidy' the data files so I could easily use them with the R language. As Roger Peng says, if there is one rule of reproducible research it is "Don't do things by hand! Editing data manually with a spreadsheet is not reproducible".

There is no 'audit trail' left of how I manipulated the data and created the chart. So after a few years even I can't remember the steps I made back then to clean the data! That then can be a disincentive to update and improve the charts.

However, I have found a couple of cool open and 'tidy' data packages of global temperatures that solve the reproducibility problem. The non-profit Open Knowledge International provides these packages as part of their core data sets.

One package is the Global Temperature Time Series. From its web page you can download two temperature data series at monthly or annual intervals in 'tidy' csv format. It's almost up to date, with October 2016 the most recent data point. So that's a pretty good head start for my R charts.

But it is better than that. The data is held in a Github repository. From there the data package can be downloaded as a zip file. After unzipping, this includes the csv data files, an open data licence, a read-me file, a .json file and a cool Python script that updates the data from source! I can run the script file on my laptop and it goes off by itself and gets the latest data to November 2016 and formats it into 'tidy' csv format files. This just seems like magic at first! Very cool! No manual data cleaning! Very reproducible!

Here is a screenshot of the Python script running in an X-terminal window on my Debian Jessie MX-16 operating system on my Dell Inspiron 6000 laptop.

The file "monthly.csv" includes two data series; the NOAA National Climatic Data Center (NCDC), global component of Climate at a Glance (GCAG) and the perhaps more well-known NASA Goddard Institute for Space Studies (GISS) Surface Temperature Analysis, Global Land-Ocean Temperature Index.

I just want to use the NASA GISTEMP data, so there is some R code to separate it out into its own dataframe. The annual data stops at 2015, so I am going to make a new annual data vector with 2016 as the mean of the eleven months to November 2016. And 2016 is, surprise, surprise, the warmest year.
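Separating out one source and averaging by year can be sketched in a few lines of Python. This is an illustration of the same idea, not the author's R code. It assumes "monthly.csv" has columns named `Source`, `Date` and `Mean`, with dates starting with a four-digit year. Check the actual header before relying on this. The month count is returned alongside each mean so that a partial year, such as the eleven months of 2016, is easy to spot. The sample rows are made up.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

def annual_means(csv_file, source="GISTEMP"):
    """Average the monthly 'Mean' values of one source by calendar year.

    Returns {year: (annual mean, number of months used)}."""
    by_year = defaultdict(list)
    for row in csv.DictReader(csv_file):
        if row["Source"] != source:
            continue  # skip the other series (e.g. GCAG)
        year = int(row["Date"][:4])  # assumes dates start with a four-digit year
        by_year[year].append(float(row["Mean"]))
    return {year: (mean(values), len(values)) for year, values in sorted(by_year.items())}

sample = io.StringIO(
    "Source,Date,Mean\n"
    "GISTEMP,2015-01-06,0.8\n"
    "GCAG,2015-01-06,0.9\n"
    "GISTEMP,2015-02-06,1.0\n"
    "GISTEMP,2016-01-06,1.2\n"
)
result = annual_means(sample)
```

With the real file, open it with `open("monthly.csv", newline="")` and pass the file object to `annual_means`.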

Here is a simple line chart of the annual means.

Here is another line chart of the annual means with an additional data series, an eleven-year lowess-smoothed series.

Here is the R code for the two graphs.
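For readers unfamiliar with lowess, here is a minimal Python sketch of the idea behind the smoothed series. At each year it fits a straight line to the neighbouring years, weighting closer years more heavily with the tricube weight. It is a simplified version: it assumes distinct x values, takes "eleven-year" to mean the 11 nearest points, and omits the robustness iterations that R's `lowess()` performs by default. It will therefore not reproduce R's output exactly. The function name is hypothetical.

```python
def lowess_simple(xs, ys, window=11):
    """Locally weighted linear fit at each x, using the `window` nearest points and
    tricube weights. Assumes distinct x values; no robustness iterations."""
    if window < 3 or len(xs) < 3:
        raise ValueError("need a window of at least 3 and at least 3 points")
    k = min(window, len(xs))
    fitted = []
    for x0 in xs:
        # Bandwidth: distance from x0 to its k-th nearest point (x0 itself counts).
        h = sorted(abs(x - x0) for x in xs)[k - 1]
        # Tricube weights; points at or beyond the bandwidth get zero weight.
        w = [(1 - (abs(x - x0) / h) ** 3) ** 3 if abs(x - x0) < h else 0.0 for x in xs]
        sw = sum(w)
        mx = sum(wi * x for wi, x in zip(w, xs)) / sw
        my = sum(wi * y for wi, y in zip(w, ys)) / sw
        sxx = sum(wi * (x - mx) ** 2 for wi, x in zip(w, xs))
        sxy = sum(wi * (x - mx) * (y - my) for wi, x, y in zip(w, xs, ys))
        slope = sxy / sxx if sxx > 0 else 0.0
        fitted.append(my + slope * (x0 - mx))  # value of the local line at x0
    return fitted
```

Because each local fit is a straight line, a series that is already linear comes back unchanged. Wiggly annual temperatures come back smoothed over roughly a decade either side.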